Part 2 - Run whisper on external server

Run Whisper on an external server using the Wyoming server for faster-whisper.

The server does nothing more than take speech and convert it to text.

First, install the STT (speech-to-text) service on a device that can handle the speech recognition workload. This could be a separate server.

Find a suitable Whisper container on Docker Hub.

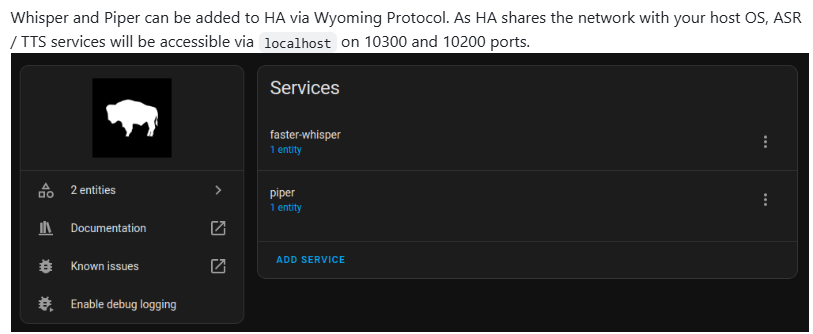

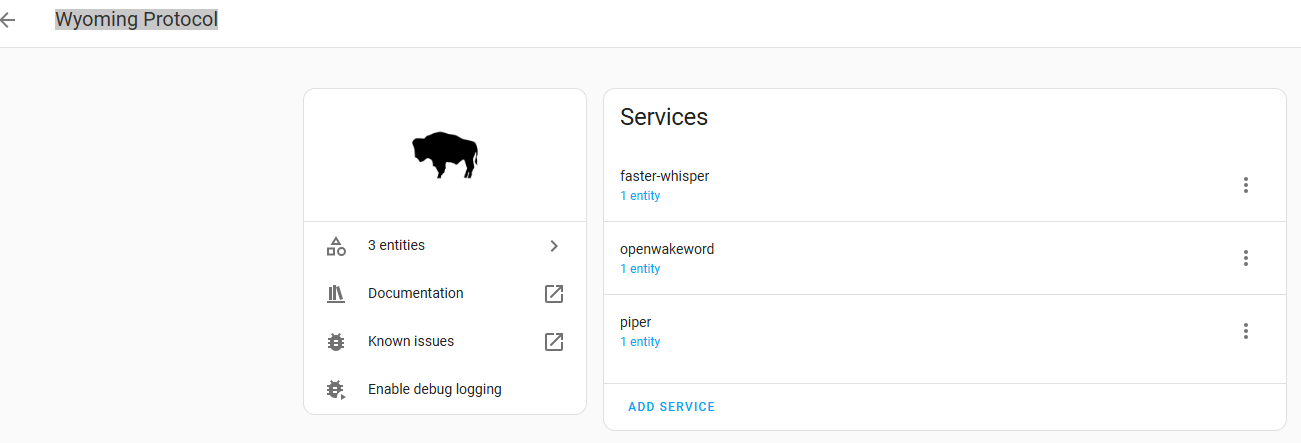

Next, integrate with Home Assistant: use Home Assistant's Wyoming Protocol integration, which sends the audio to the STT server and receives the transcribed text back.
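For context, here is roughly what travels over that connection. This is a sketch, assuming the JSON-lines framing described in the rhasspy/wyoming README (one JSON header line per event, followed by an optional binary payload whose size is given by `payload_length`) and the event names `transcribe`, `audio-start`, `audio-chunk` and `audio-stop`. The 16 kHz, 16-bit mono format and the 2048-byte chunk size are my assumptions, and `encode_event`/`transcribe_stream` are hypothetical helpers, not part of any library.

```python
import json


def encode_event(event_type, data=None, payload=b""):
    """Frame one event: a JSON header line, then the raw payload bytes (if any)."""
    header = {"type": event_type}
    if data is not None:
        header["data"] = data  # event data carried inline in the header line
    if payload:
        header["payload_length"] = len(payload)  # tells the reader how many bytes follow
    return json.dumps(header).encode("utf-8") + b"\n" + payload


def transcribe_stream(pcm, rate=16000, width=2, channels=1, chunk_bytes=2048, language="en"):
    """Build the bytes a client would send to ask an ASR server for a transcript.

    Assumed format: raw little-endian PCM, `width` bytes per sample.
    """
    fmt = {"rate": rate, "width": width, "channels": channels}
    out = [encode_event("transcribe", {"language": language})]
    out.append(encode_event("audio-start", fmt))
    for i in range(0, len(pcm), chunk_bytes):
        out.append(encode_event("audio-chunk", fmt, pcm[i:i + chunk_bytes]))
    out.append(encode_event("audio-stop"))
    return b"".join(out)
```

After `audio-stop`, the server should answer with a `transcript` event whose data holds the recognized text.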

I easily integrated a remote rhasspy/wyoming-whisper container running on a Vultr server with my Home Assistant installation on a Raspberry Pi 4.

Note

Whisper works, but it is slow (around 15 seconds).

It would be great if it supported a GPU; that would make it much faster.


Image options

https://github.com/OpenAI/whisper

https://github.com/SYSTRAN/faster-whisper

https://github.com/rhasspy/wyoming-faster-whisper ==> docker pull rhasspy/wyoming-whisper

or

Whisper add-on

The Whisper add-on uses wyoming-faster-whisper.

wyoming-faster-whisper in turn uses SYSTRAN/faster-whisper.

SYSTRAN/faster-whisper is based on OpenAI/whisper.

As I understand it, the Wyoming protocol layer is added by wyoming-faster-whisper; the original OpenAI Whisper project does not provide a Wyoming server itself.

Download and set up the standalone Whisper server from this repository: https://github.com/rhasspy/wyoming-faster-whisper

~# docker search wyoming-whisper

output

NAME DESCRIPTION STARS OFFICIAL

rhasspy/wyoming-whisper Wyoming protocol server for faster-whisper s… 10

abtools/wyoming-whisper-cuda wyoming-whisper compiled with CUDA support f… 0

pando85/wyoming-whisper Wyoming protocol server for faster whisper s… 0

rhasspy/wyoming-whisper-cpp 0

dwyschka/wyoming-whisper-cuda 0

slackr31337/wyoming-whisper-gpu https://github.com/slackr31337/wyoming-whisp… 0

grubertech/wyoming-whisper fast-whisper with large-v3 model built for G… 0

confusedengineer/wyoming-whisper-gpu Github: https://github.com/Confused-Enginee… 0

robomagus/wyoming-whisper-rk3588 0

clonyara/wyoming-whisper 0

xiaozhch5/wyoming-whisper

Run the container

https://github.com/rhasspy/wyoming-faster-whisper

docker run -it -p 10300:10300 -v /path/to/local/data:/data rhasspy/wyoming-whisper \
    --model tiny-int8 --language en

This works fine.
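Before adding the server to Home Assistant, it is worth checking from the Home Assistant machine (the Raspberry Pi in my case) that port 10300 is reachable at all. A minimal sketch using only the Python standard library; `wyoming_port_open` is a hypothetical helper name, and a successful connect only proves the port is open, not that Whisper is answering.

```python
import socket


def wyoming_port_open(host, port=10300, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and unreachable hosts
        return False
```

For example, `wyoming_port_open("192.0.2.10")` with your own server's IP in place of that placeholder address.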

docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

717fe1966138 yaming116/sherpa-onnx-asr:latest "python app.py" 2 weeks ago Up 7 days 5001/tcp, 0.0.0.0:10700->10700/tcp, :::10700->10700/tcp sherpa-onnx-asr

1ee50dbb7a33 rhasspy/wyoming-piper "bash /run.sh --voic…" 2 weeks ago Up 7 days 0.0.0.0:10200->10200/tcp, :::10200->10200/tcp nice_hofstadter

01b832193e61 rhasspy/wyoming-openwakeword "bash /run.sh --prel…" 2 weeks ago Up 7 days 0.0.0.0:10400->10400/tcp, :::10400->10400/tcp gallant_engelbart

0389595d9f22 rhasspy/wyoming-whisper "bash /run.sh --mode…" 2 weeks ago Up 18 hours 0.0.0.0:10300->10300/tcp, :::10300->10300/tcp nostalgic_jang
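The listing shows each Wyoming service published on its own host port: wyoming-whisper on 10300, wyoming-piper on 10200, wyoming-openwakeword on 10400 and sherpa-onnx-asr on 10700 (its 5001/tcp is only exposed, not published). To pull those numbers out programmatically, here is a small sketch that parses the PORTS column. `published_ports` is a hypothetical helper, and it assumes the `host:port->port/proto` text format shown above; port ranges are not handled.

```python
import re

# Matches the "<host>:<host_port>-><container_port>/<proto>" part of a published port.
PORT_RE = re.compile(r":(\d+)->(\d+)/(tcp|udp)")


def published_ports(ports_column):
    """Return sorted, de-duplicated (host_port, container_port, proto) tuples."""
    return sorted({(int(h), int(c), proto) for h, c, proto in PORT_RE.findall(ports_column)})
```

The IPv4 and IPv6 bindings of the same port collapse into one tuple. Feed it the output of `docker ps --format '{{.Names}}\t{{.Ports}}'`, one line at a time.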

docker inspect 0389595d9f22

output


[

{

"Id": "0389595d9f22b751ee07ac9380389192713979a6c7f2b3f11e48c15505055bf0",

"Created": "2025-01-04T07:35:36.285820254Z",

"Path": "bash",

"Args": [

"/run.sh",

"--model",

"tiny-int8",

"--language",

"en"

],

"State": {

"Status": "running",

"Running": true,

"Paused": false,

"Restarting": false,

"OOMKilled": false,

"Dead": false,

"Pid": 82152,

"ExitCode": 0,

"Error": "",

"StartedAt": "2025-01-23T19:30:21.636452101Z",

"FinishedAt": "2025-01-17T13:24:28.786242942Z"

},

"Image": "sha256:07c182a447fb456911f2202293b43868ef9bbbfe48aa06c4067891e2a6c2ea53",

"ResolvConfPath": "/var/lib/docker/containers/0389595d9f22b751ee07ac9380389192713979a6c7f2b3f11e48c15505055bf0/resolv.conf",

"HostnamePath": "/var/lib/docker/containers/0389595d9f22b751ee07ac9380389192713979a6c7f2b3f11e48c15505055bf0/hostname",

"HostsPath": "/var/lib/docker/containers/0389595d9f22b751ee07ac9380389192713979a6c7f2b3f11e48c15505055bf0/hosts",

"LogPath": "/var/lib/docker/containers/0389595d9f22b751ee07ac9380389192713979a6c7f2b3f11e48c15505055bf0/0389595d9f22b751ee07ac9380389192713979a6c7f2b3f11e48c15505055bf0-json.log",

"Name": "/nostalgic_jang",

"RestartCount": 0,

"Driver": "overlay2",

"Platform": "linux",

"MountLabel": "",

"ProcessLabel": "",

"AppArmorProfile": "docker-default",

"ExecIDs": null,

"HostConfig": {

"Binds": [

"/path/to/local/data:/data"

],

"ContainerIDFile": "",

"LogConfig": {

"Type": "json-file",

"Config": {}

},

"NetworkMode": "default",

"PortBindings": {

"10300/tcp": [

{

"HostIp": "",

"HostPort": "10300"

}

]

},

"RestartPolicy": {

"Name": "no",

"MaximumRetryCount": 0

},

"AutoRemove": false,

"VolumeDriver": "",

"VolumesFrom": null,

"ConsoleSize": [

24,

151

],

"CapAdd": null,

"CapDrop": null,

"CgroupnsMode": "private",

"Dns": [],

"DnsOptions": [],

"DnsSearch": [],

"ExtraHosts": null,

"GroupAdd": null,

"IpcMode": "private",

"Cgroup": "",

"Links": null,

"OomScoreAdj": 0,

"PidMode": "",

"Privileged": false,

"PublishAllPorts": false,

"ReadonlyRootfs": false,

"SecurityOpt": null,

"UTSMode": "",

"UsernsMode": "",

"ShmSize": 67108864,

"Runtime": "runc",

"Isolation": "",

"CpuShares": 0,

"Memory": 0,

"NanoCpus": 0,

"CgroupParent": "",

"BlkioWeight": 0,

"BlkioWeightDevice": [],

"BlkioDeviceReadBps": [],

"BlkioDeviceWriteBps": [],

"BlkioDeviceReadIOps": [],

"BlkioDeviceWriteIOps": [],

"CpuPeriod": 0,

"CpuQuota": 0,

"CpuRealtimePeriod": 0,

"CpuRealtimeRuntime": 0,

"CpusetCpus": "",

"CpusetMems": "",

"Devices": [],

"DeviceCgroupRules": null,

"DeviceRequests": null,

"MemoryReservation": 0,

"MemorySwap": 0,

"MemorySwappiness": null,

"OomKillDisable": null,

"PidsLimit": null,

"Ulimits": null,

"CpuCount": 0,

"CpuPercent": 0,

"IOMaximumIOps": 0,

"IOMaximumBandwidth": 0,

"MaskedPaths": [

"/proc/asound",

"/proc/acpi",

"/proc/kcore",

"/proc/keys",

"/proc/latency_stats",

"/proc/timer_list",

"/proc/timer_stats",

"/proc/sched_debug",

"/proc/scsi",

"/sys/firmware",

"/sys/devices/virtual/powercap"

],

"ReadonlyPaths": [

"/proc/bus",

"/proc/fs",

"/proc/irq",

"/proc/sys",

"/proc/sysrq-trigger"

]

},

"GraphDriver": {

"Data": {

"LowerDir": "/var/lib/docker/overlay2/a0b16b408aa929012f30886d7a2fcfd0786637961f3a0e844ff7ebbfdf8084e9-init/diff:/var/lib/docker/overlay2/cd058de8a3e532b248d6828a93de3515596f2692b0c4bf465d0259a1c3a3878e/diff:/var/lib/docker/overlay2/bdb5a9796506747e1b2e98e4124a848f5841b35c744d11b310afe2596f858499/diff:/var/lib/docker/overlay2/a09cd2d9fc51d90ef7c321e29f014003aed701f2b16c82ca13e42cfb9d78b0c6/diff:/var/lib/docker/overlay2/8abe9e4a501483cb1fa5009290ca085015f6529e50e3a3414d90ba3a9c20524e/diff",

"MergedDir": "/var/lib/docker/overlay2/a0b16b408aa929012f30886d7a2fcfd0786637961f3a0e844ff7ebbfdf8084e9/merged",

"UpperDir": "/var/lib/docker/overlay2/a0b16b408aa929012f30886d7a2fcfd0786637961f3a0e844ff7ebbfdf8084e9/diff",

"WorkDir": "/var/lib/docker/overlay2/a0b16b408aa929012f30886d7a2fcfd0786637961f3a0e844ff7ebbfdf8084e9/work"

},

"Name": "overlay2"

},

"Mounts": [

{

"Type": "bind",

"Source": "/path/to/local/data",

"Destination": "/data",

"Mode": "",

"RW": true,

"Propagation": "rprivate"

}

],

"Config": {

"Hostname": "0389595d9f22",

"Domainname": "",

"User": "",

"AttachStdin": true,

"AttachStdout": true,

"AttachStderr": true,

"ExposedPorts": {

"10300/tcp": {}

},

"Tty": true,

"OpenStdin": true,

"StdinOnce": true,

"Env": [

"PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"

],

"Cmd": [

"--model",

"tiny-int8",

"--language",

"en"

],

"Image": "rhasspy/wyoming-whisper",

"Volumes": null,

"WorkingDir": "/",

"Entrypoint": [

"bash",

"/run.sh"

],

"OnBuild": null,

"Labels": {}

},

"NetworkSettings": {

"Bridge": "",

"SandboxID": "0ab0205986917126b832d57fd78aa7f344900f8fa215c135662266aedba94e83",

"HairpinMode": false,

"LinkLocalIPv6Address": "",

"LinkLocalIPv6PrefixLen": 0,

"Ports": {

"10300/tcp": [

{

"HostIp": "0.0.0.0",

"HostPort": "10300"

},

{

"HostIp": "::",

"HostPort": "10300"

}

]

},

"SandboxKey": "/var/run/docker/netns/0ab020598691",

"SecondaryIPAddresses": null,

"SecondaryIPv6Addresses": null,

"EndpointID": "164817e434e5fc4c67db24dfeb63738c0982d87e715916e71ff33b7e588b7bf6",

"Gateway": "172.17.0.1",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"IPAddress": "172.17.0.5",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"MacAddress": "02:42:ac:11:00:05",

"Networks": {

"bridge": {

"IPAMConfig": null,

"Links": null,

"Aliases": null,

"NetworkID": "00ceca59d76906efdaaa76c371b716ff489bed887cb9a5f023855fd076812873",

"EndpointID": "164817e434e5fc4c67db24dfeb63738c0982d87e715916e71ff33b7e588b7bf6",

"Gateway": "172.17.0.1",

"IPAddress": "172.17.0.5",

"IPPrefixLen": 16,

"IPv6Gateway": "",

"GlobalIPv6Address": "",

"GlobalIPv6PrefixLen": 0,

"MacAddress": "02:42:ac:11:00:05",

"DriverOpts": null

}

}

}

}

]
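Most of this output can be ignored. The fields that matter for this setup are `Args` (model and language), `HostConfig.PortBindings` (port 10300), `HostConfig.Binds` (the model directory) and `HostConfig.RestartPolicy`. Here the restart policy is `no`, so Docker will not start the container again after a reboot or crash; passing `--restart unless-stopped` to `docker run` changes that. A sketch that extracts just those fields; `summarize_inspect` is a hypothetical helper name.

```python
import json


def summarize_inspect(inspect_json):
    """Pull the settings relevant to a Wyoming container out of `docker inspect` output."""
    c = json.loads(inspect_json)[0]  # docker inspect prints a JSON array, one entry per object
    host = c["HostConfig"]
    return {
        "name": c["Name"].lstrip("/"),
        "image": c["Config"]["Image"],
        "args": c["Args"],
        "restart": host["RestartPolicy"]["Name"],
        "binds": host["Binds"] or [],
        # Flatten {"10300/tcp": [{"HostPort": "10300"}, ...]} into a list of host ports.
        "ports": sorted(
            b["HostPort"] for bindings in (host["PortBindings"] or {}).values() for b in bindings
        ),
    }
```

Usage: `summarize_inspect(subprocess.run(["docker", "inspect", "0389595d9f22"], capture_output=True, text=True).stdout)` after `import subprocess`.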

Using it in Home Assistant

Home Assistant requires the Wyoming Whisper server to be exposed on the network (its port published from the container). You then add it in the Home Assistant settings:

Settings -> Integrations -> Add Integration: add the Wyoming Protocol integration and enter the IP address of the machine running the standalone Wyoming Whisper server. The port is 10300.

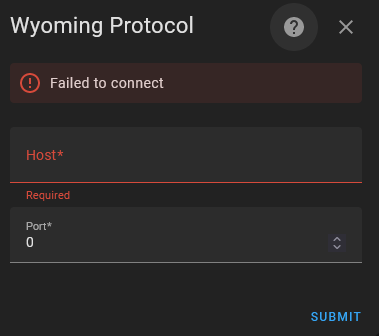

When you add the Wyoming Protocol integration for the first time, you are asked for the connection details of the Wyoming Whisper server.

Once the containers were running, I added Whisper in the Home Assistant interface using the IP address of my Docker host and port 10300.

You simply enter the IP address and port of a Wyoming application such as Whisper.

When you press “Submit”, HA detects the application, and it then appears as an entity in the Wyoming Protocol integration.
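As far as I can tell, the detection step is a simple handshake: the client sends a `describe` event and the server replies with an `info` event listing its ASR programs and models. The sketch below reproduces that handshake so the server can be tested without Home Assistant. It assumes the JSON-lines framing from the rhasspy/wyoming README, where event data may sit inline in the header or follow it as `data_length` bytes; the exact contents of `info` vary by server version, and `read_event`/`describe` are hypothetical helpers.

```python
import json
import socket


def read_event(f):
    """Read one event (header line, optional data bytes, optional payload) from a binary stream."""
    line = f.readline()
    if not line:
        raise ConnectionError("server closed the connection")
    header = json.loads(line)
    data = header.get("data") or {}
    if header.get("data_length"):
        data.update(json.loads(f.read(header["data_length"])))  # extra data after the header
    payload = f.read(header["payload_length"]) if header.get("payload_length") else b""
    return header["type"], data, payload


def describe(host, port=10300, timeout=5.0):
    """Send a `describe` event and return the data of the server's `info` reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(b'{"type": "describe"}\n')
        with sock.makefile("rb") as f:
            event_type, data, _ = read_event(f)
    if event_type != "info":
        raise ValueError(f"expected 'info', got {event_type!r}")
    return data
```

For example, `describe("192.0.2.10")["asr"]` (placeholder IP) should list the faster-whisper program and the model it was started with.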

Where are the models saved?

STT models are automatically downloaded from https://huggingface.co and stored in /path/to/local/data, the host directory mounted at /data in the container.

docker run -it -p 10300:10300 -v /path/to/local/data:/data rhasspy/wyoming-whisper \
    --model medium-int8 --language en

output

config.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2.02k/2.02k [00:00<00:00, 49.7kB/s]

vocabulary.txt: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 460k/460k [00:00<00:00, 3.49MB/s]

model.bin: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 785M/785M [00:21<00:00, 37.1MB/s]

tokenizer.json: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2.48M/2.48M [00:01<00:00, 1.79MB/s]

INFO:__main__:Ready

docker run -it -p 10300:10300 -v /path/to/local/data:/data rhasspy/wyoming-whisper --model large --language en

preprocessor_config.json: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 340/340 [00:00<00:00, 7.51kB/s]

config.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2.39k/2.39k [00:00<00:00, 68.2kB/s]

vocabulary.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.07M/1.07M [00:00<00:00, 6.91MB/s]

tokenizer.json: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 2.48M/2.48M [00:00<00:00, 14.1MB/s]

model.bin: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 3.09G/3.09G [01:13<00:00, 42.3MB/s]

/run.sh: line 5: 7 Killed python3 -m wyoming_faster_whisper --uri 'tcp://0.0.0.0:10300' --data-dir /data --download-dir /data "$@"
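The `Killed` line appears right after the 3.09 GB `model.bin` finished downloading. On Linux this usually means the kernel's out-of-memory killer ended the process while it was loading the large model, i.e. the server did not have enough RAM for it. A rough pre-check, sketched below, reads `MemAvailable` from `/proc/meminfo` and compares it with the model file size. The 1.5x headroom factor is an assumption, not a measured requirement, and both function names are hypothetical.

```python
def mem_available_bytes(meminfo_text):
    """Return MemAvailable from the contents of /proc/meminfo, in bytes."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            return int(line.split()[1]) * 1024  # /proc/meminfo reports this value in kB
    raise ValueError("MemAvailable not found")


def model_probably_fits(model_bytes, available_bytes, headroom=1.5):
    """Heuristic only: require `headroom` times the model file size to be free."""
    return available_bytes >= model_bytes * headroom
```

On the server, `mem_available_bytes(open("/proc/meminfo").read())` gives the current figure to compare against the `model.bin` sizes in the download output.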

Each STT model has its own strengths and weaknesses, particularly in accuracy, speed, and how much memory it needs to run reliably.

root@vultr:~# cd /path/to/local/data

root@vultr:/path/to/local/data# tree -L 3

.

├── en_US-amy-low.onnx

├── en_US-amy-low.onnx.json

├── en_US-amy-medium.onnx

├── en_US-amy-medium.onnx.json

├── en_US-joe-medium.onnx

├── en_US-joe-medium.onnx.json

├── en_US-lessac-high.onnx

├── en_US-lessac-high.onnx.json

├── en_US-lessac-medium.onnx

├── en_US-lessac-medium.onnx.json

├── en_US-ryan-high.onnx

├── en_US-ryan-high.onnx.json

├── models--rhasspy--faster-whisper-medium-int8

│ ├── blobs

│ │ ├── c9074644d9d1205686f16d411564729461324b75

│ │ ├── e19ba3e83e68b15d480def8185b07fc8af56caa15bb8470f2c47ec8c1faaec44

│ │ └── e86f0ed3287af742920a8ef37577c347a1a8153b

│ ├── refs

│ │ └── main

│ └── snapshots

│ └── c9af9dfadcad017757293c10e4478d42a5a06773

├── models--rhasspy--faster-whisper-tiny-int8

│ ├── blobs

│ │ ├── 6fdeb89775be8b3455197e4685fec5d59ce47874de4fb8fb7724a43ea205a7b0

│ │ ├── c9074644d9d1205686f16d411564729461324b75

│ │ └── e86f0ed3287af742920a8ef37577c347a1a8153b

│ ├── refs

│ │ └── main

│ └── snapshots

│ └── 5b6382e0f4ac867ce9ff24aaa249400a7c6c73d9

└── models--Systran--faster-whisper-large-v3

├── blobs

│ ├── 0adcd01e7c237205d593b707e66dd5d7bc785d2d

│ ├── 3a5e2ba63acdcac9a19ba56cf9bd27f185bfff61

│ ├── 69f74147e3334731bc3a76048724833325d2ec74642fb52620eda87352e3d4f1

│ ├── 75336feae814999bae6ccccdecf177639ffc6f9d

│ └── 931c77a740890c46365c7ae0c9d350ba3cca908f

├── refs

│ └── main

└── snapshots

└── edaa852ec7e145841d8ffdb056a99866b5f0a478

15 directories, 26 files
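The directory also holds Piper voices (the `en_US-*.onnx` files), which suggests the Piper container uses the same mount, while each Whisper model lives in its own Hugging Face cache folder named `models--<org>--<name>`. To see which models are cached and how much disk each one uses, here is a small sketch. It assumes the standard Hugging Face cache layout, where `snapshots/` only holds symlinks into `blobs/`, so only `blobs/` is counted; `cached_models` is a hypothetical helper.

```python
from pathlib import Path


def cached_models(data_dir):
    """Map each cached model's repo ID (e.g. 'rhasspy/faster-whisper-tiny-int8') to its size in bytes."""
    models = {}
    for p in Path(data_dir).glob("models--*"):
        if not p.is_dir():
            continue
        # "models--rhasspy--faster-whisper-tiny-int8" -> ("rhasspy", "faster-whisper-tiny-int8")
        org, _, name = p.name[len("models--"):].partition("--")
        blobs = p / "blobs"  # snapshots/ links into blobs/, so counting blobs avoids double counting
        size = sum(f.stat().st_size for f in blobs.glob("*") if f.is_file()) if blobs.is_dir() else 0
        models[f"{org}/{name}"] = size
    return dict(sorted(models.items()))
```

For example, `cached_models("/path/to/local/data")` would show whether the large-v3 download is still taking up space after the failed start.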

Details:

https://www.matterxiaomi.com/boards/topic/15742/how-to-manually-install-wyoming-piper-and-whisper-on-home-assistant-core/page/2#60079

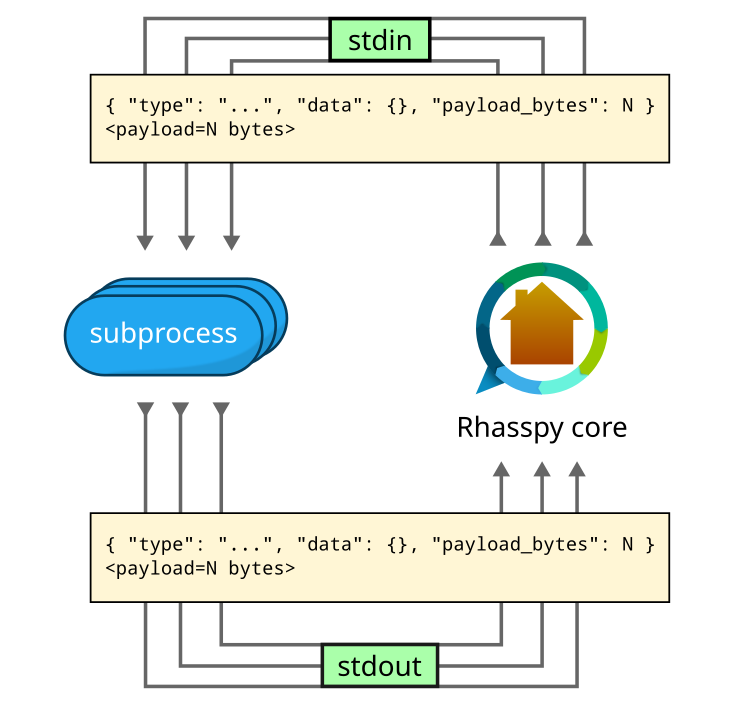

How it works

Options

Option 1: desktop machine

I was also curious whether Whisper would run faster on a desktop machine. Since I did not have a Linux server, I installed Ubuntu in VirtualBox, exposed the virtual machine to my network, installed Docker on Ubuntu, and then downloaded Whisper. I could easily integrate this remote Whisper with my Home Assistant installation on a Raspberry Pi 4. Everything works as expected so far.

I’m running this and a few other containers on

Debian 11 bullseye

AMD Ryzen 7 3800X

32 GB RAM

You basically need a GPU to enable accurate STT processing with those larger models. CPU processing is too slow.

Wyoming-Whisper in Docker with Nvidia GPU support.

https://github.com/Fraddles/Home-Automation/tree/main/Voice-Assistant

Useful links

https://community.home-assistant.io/t/run-whisper-on-external-server/567449

https://developer.ibm.com/tutorials/run-a-single-container-speech-to-text-service-on-docker/
